Bad Data Can’t Stop Good People Analytics

Don’t let bad data keep you from good business insights. You don’t need perfect data to use people analytics to make a business impact.

Does bad data keep you from trusting your people analytics insights? Let’s face it: your data will never be perfect. The way that records about people are created, maintained and adapted will always be prone to error. The process by which the data is entered introduces some of these errors. Other errors occur as the business evolves and new names, structures or categorizations get layered into the existing data set. Having implemented thousands of people analytics systems, we have never met an organization with perfect data, and we never expect to!

Your data will never be perfect (and that’s okay)

There are still a few CHROs who insist bad data is holding them back from progressing their people analytics function. However, this group is rapidly disappearing in the rearview mirror of modern HR practice. The pandemic proved once and for all that you can’t effectively run an enterprise without broad access to good information about the people that make up the business. In March of 2020, usage of our system spiked by 40% overnight as business leaders made thousands of decisions about how to deal with lockdown. Usage has not dropped off. Every week, more of our customers are rolling out people data to their total management population.

I hear you say “Hang on, if people data is imperfect, and yet people data is being broadly shared, how do you avoid being constantly challenged about the numbers?”

Reporting and analytics aren’t the same

The discipline of people analytics is focused on informing decisions, so that the business (and the people in it) thrive. This is distinct from the process of reporting, which focuses on a static record of results at a given point in time. Less experienced groups will fall into the trap of attempting to constantly align their reporting and their analytics work. The two disciplines are distinct, requiring different approaches to data management. Therefore, avoid the time-wasting trap of attempting to create perfect alignment.

Reporting and people analytics need to be distinct. Let’s look at an example of why, using talent acquisition data. Many applicant tracking systems will overwrite the record for specific events or stages within the hiring process. A candidate in February might be a new hire in March. This means the record of candidates that got counted at the end of February has been overwritten with new data by the end of March. So any analytic process that uses data from the first three months of the year will not necessarily match the reporting that was produced at the end of February. The records that were counted in February may no longer exist in the form in which they were counted, so an analysis run in March cannot reproduce the February figure.

Storing data snapshots

This is also a good reason to pull applicant tracking system (ATS) data out daily and store it in an analytic application as a stream of historical events. This removes reliance on your ATS as the source of insight into your hiring performance. I recently spoke to a head of talent acquisition who relied on a key person to manually sift through all of the requisition and application records to fill gaps and update stale entries. This may be possible in a small organization, but it is unrealistic in any enterprise of more than 1,000 people.
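To make the idea concrete, here is a minimal sketch of turning daily ATS extracts into a stream of events. Everything in it is an assumption for illustration: the snapshot layout (a mapping of candidate ID to current stage), the function names `diff_snapshots` and `count_in_stage`, and the sample dates are hypothetical, not the behavior of any particular ATS or analytics product.

```python
from datetime import date


def diff_snapshots(previous, current, snapshot_date):
    """Return one event per candidate whose stage is new or has changed.

    Candidates that disappear from the extract are ignored in this sketch.
    """
    events = []
    for candidate_id, stage in current.items():
        if previous.get(candidate_id) != stage:
            events.append(
                {"candidate_id": candidate_id, "stage": stage, "date": snapshot_date}
            )
    return events


def count_in_stage(events, stage, as_of):
    """Count candidates whose latest stage on or before `as_of` equals `stage`."""
    latest = {}
    for event in sorted(events, key=lambda e: e["date"]):
        if event["date"] <= as_of:
            latest[event["candidate_id"]] = event["stage"]
    return sum(1 for s in latest.values() if s == stage)


# Hypothetical extracts: the ATS overwrites each candidate's stage in place.
feb_28 = {"c1": "candidate", "c2": "candidate"}
mar_31 = {"c1": "hired", "c2": "candidate"}

# The event stream keeps the history that the ATS itself has overwritten.
events = diff_snapshots({}, feb_28, date(2023, 2, 28))
events += diff_snapshots(feb_28, mar_31, date(2023, 3, 31))

candidates_end_of_feb = count_in_stage(events, "candidate", date(2023, 2, 28))
candidates_end_of_mar = count_in_stage(events, "candidate", date(2023, 3, 31))
```

Because the history lives in the event stream, the end-of-February count can still be reproduced in March, even though the ATS now shows the first candidate only as a hire.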

Reports are the best reflection of the current state at a point in time. Data is constantly evolving. Analytic processes aim to use the most up-to-date information to inform decisions, rather than being locked to some historical reference point. This is why, in the example above, the reported number for February may not exactly match the February figure included in the analysis. Small discrepancies do not invalidate the insight gained from the analysis. They merely reflect the evolving nature of data and an appropriate level of pragmatism in balancing data quality against the importance of the decision being made.

Don’t mix data sources

Mandate which systems are the trusted source for specific elements of data. Many organizations waste countless hours attempting to match the records in their HRIS with those in their analytic application. There are several legitimate reasons why these numbers will vary slightly, and the effort to maintain perfect alignment is an unnecessary time expense. Some of the causes of mismatch are simple. The HRIS is the place where records are updated, so there can be a lag between the record change in the source system and the information flowing through to the analytic system. Real-time alignment between these two systems would actually be harmful. Measures like resignation rate, which are calculated against average headcount, would change every time the system adds a new record. Constantly shifting numbers erode user trust. A daily batch update therefore provides the balance between recency and the stability that analytic consumers need.
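The effect on resignation rate is easy to see with a small worked example. This is a sketch under a stated assumption: average headcount is taken as the simple mean of opening and closing headcount for the period, which is one common convention and not necessarily the one your analytics application uses. The function name and the figures are hypothetical.

```python
def resignation_rate(resignations, opening_headcount, closing_headcount):
    """Resignations divided by average headcount for the period.

    Assumption: average headcount is the simple mean of the opening and
    closing headcount. Other averaging methods exist.
    """
    average_headcount = (opening_headcount + closing_headcount) / 2
    return resignations / average_headcount


# Same ten resignations, measured before and after ten new hires are keyed in.
rate_before_hires = resignation_rate(10, 200, 200)  # 10 / 200
rate_after_hires = resignation_rate(10, 200, 210)   # 10 / 205
```

Nothing about resignations changed between the two calculations, yet the rate moved because the denominator did. With a real-time feed, users would see that movement throughout the day. With a daily batch, they see one stable figure per day.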

In addition, many HRIS systems attempt to provide calculations. However, these calculations often lack a sound methodology. For example, an employee in an HRIS can be counted as both active and inactive at the same time, an inconsistency which damages the credibility of the data. These limitations in methodology, driven by the technical architecture, are another reason the HRIS is simply the place to manage people data transactions, and the analytics application is the place to understand the effects of those transactions. Attempting to blend the two, or to use one system for some measures and the other for the rest, introduces unnecessary confusion.

Expectations of people data

Set two core expectations across the business when it comes to using people data. Expectation 1: the data used for people analytics work will not perfectly match prior end-of-month reports. As in finance, people data often needs to be restated, and the job of people analytics is to use the latest and best data, not to retroactively work out what has changed in order to maintain alignment. Expectation 2: the transactional systems and the analytics application won’t perfectly align. Simple issues like timing, methodology and overall system capabilities will lead to discrepancies between these two systems. Small differences do not invalidate the use of the data to inform decision-making processes.

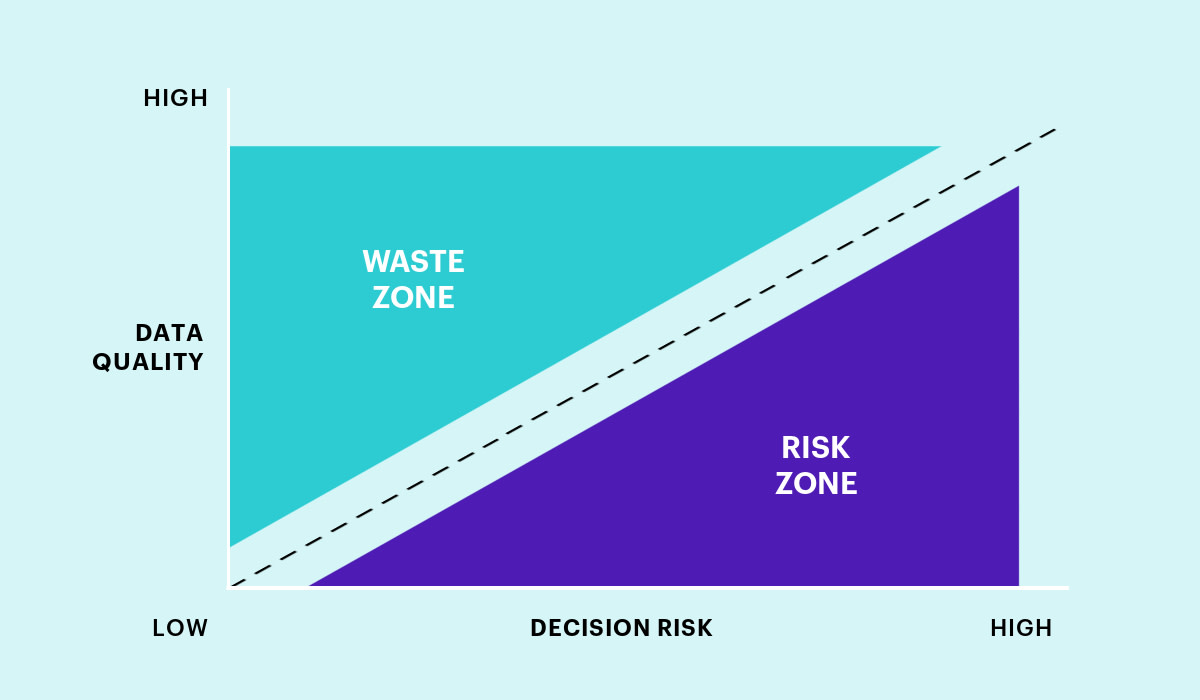

Balancing the risk of bad data

The matrix below explains the need to balance the risks of bad data and quality of decisions when it comes to the use of people data. The higher the risk associated with the people decision, the higher the need for associated data quality. No decision is ever perfect. Expecting perfect data is both unreasonable and unnecessary. Reporting on the quality of data fed into the decision making process is part of working within the risk/quality framework.

Balance the cost of high quality data against the risks of bad data

The goal is to stay out of the risk zone, where decisions are being made on data of insufficient quality, and equally to stay out of the waste zone, where too much time is invested in perfecting the data used to advance a low-risk decision.
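As a rough illustration of the two zones, the sketch below scores both decision risk and data quality on a 1 to 5 scale and compares them. The scale, the one-point tolerance and the function name `classify_zone` are all assumptions made for this example. The matrix itself does not prescribe numeric thresholds, so treat these as placeholders to adapt.

```python
def classify_zone(decision_risk, data_quality):
    """Place a decision in the risk zone, the waste zone, or neither.

    Both inputs use a hypothetical 1-5 scale (5 = highest risk / best quality).
    The thresholds below are illustrative, not part of any formal framework.
    """
    if data_quality < decision_risk:
        # Data is not good enough for a decision this risky.
        return "risk zone"
    if data_quality > decision_risk + 1:
        # Data has been polished well beyond what the decision needs.
        return "waste zone"
    return "balanced"


layoff_decision = classify_zone(decision_risk=5, data_quality=2)
team_lunch_budget = classify_zone(decision_risk=1, data_quality=5)
quarterly_hiring_plan = classify_zone(decision_risk=3, data_quality=3)
```

Here the high-risk decision on weak data lands in the risk zone, the trivial decision on heavily polished data lands in the waste zone, and the matched pair is balanced.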

One simple way to gauge what is right in any given situation is to consider the alternative means available for making the decision. For example, if a decision has three possible outcomes, a pure guess has a 33% chance of picking the best one. Applying data that moves that likelihood to 40% or 50% puts you well ahead of where a guess would take you! Here is a classic trap that I have seen repeated often: on the basis of a small discrepancy in the data (one or two incorrect items out of hundreds), a business leader will ignore the entire pattern or insight within the data, preferring instead to trust their intuition or a guess. The alternative to leveraging data that is 95% correct is equivalent to rolling a die, which has only a 1/6 chance of landing on the number you need. Highlighting this can help to build understanding and alignment around how people data should be used for decision making. Anyone opting to ignore people data should be tasked with establishing why their chosen approach is better.

Building stakeholders

We hope by now to have clarified the expectations that lead to the effective use of people intelligence to inform business decisions. Our final piece of advice: rather than setting off on this quest as a lonely people analytics leader, surround yourself with a people data governance group that both formulates these expectations and communicates them around the business.

This process has many benefits. The primary benefit is having people from within different business functions who are involved in, and can vouch for, the quality and reliability of your team’s work. By involving the stakeholders who benefit early in the process of creating the guidelines, you build connections who can advocate your message and establish trust across the business. It may seem like unnecessary work when you are mired in the challenges of data prep and visualization. In our experience, it is more than worth the time to ensure that the stakeholders who need all of that careful and intelligent analysis actually apply it. Rather than getting caught in the perfection trap, you will make more progress by following this path: set and communicate expectations across the business. This is an essential part of building a people analytics function.
