Vee API Embedded Analytics Solution

Summary

Building a reliable, context-aware agent is hard. Different data models, inconsistent terminology, constant schema changes, and the need for secure, structured access all get in the way of great experiences. Building one that answers real HR and workforce questions—across dozens of customers, each with their own data structures and security rules—is a massive engineering challenge.

Since the launch of ChatGPT, many SaaS vendors have delivered chatbots and time-saving applications that leverage GenAI. These tools are often built on top of RAG frameworks, which lack access to the vendor’s own reporting and analytics capabilities.

This leaves customers’ decision-makers and admins stuck going back to old, slow reporting and BI tools. Meanwhile, startups are innovating, and customer expectations are rising with the pace of innovation in consumer applications.

The Vee API gives your product team a foundation to build a high-performing agent without building everything from scratch. It delivers ready-to-query, permission-aware, semantically modelled data from multiple HR systems, so you can focus on the UX and logic of your agent rather than on plumbing. Visier’s Vee API is a low-lift way for product teams to close this gap and deliver personalized intelligence, reports, and recommendations to users.

Problems to be solved

Your users will ask questions that go beyond what an LLM alone can handle. These questions require structured data, security-aware role access, historical snapshots, standardized definitions, and smart aggregation. Some of the most common problems include:

Fragmented data models: Every HCM, ATS, payroll or other HR system has a different schema—and they all change over time. Most analytics pipelines break when that happens.

Unclear change logic: Trying to interpret change logs—like figuring out when a status actually transitioned from candidate to employee—is noisy work. LLMs can’t reason about this reliably without additional metadata, rules, or temporal context, which vary from system to system.

Permissions logic is painful: You can’t afford to get this wrong. Implementing user-, role-, and org-level permissions is non-negotiable. Every data response must be scoped to the requester’s role, team, and visibility. But most chatbots shortcut this, or leave it to developers to rebuild custom logic in every integration. It’s tedious and complex.

Jargon varies wildly: Different verticals and customers use different terms for the same thing—“SDR” vs. “Sales Rep,” “green belt” vs. “intermediate skills,” “talent pool” vs. “pipeline.” That inconsistency breaks natural language processing and often confuses generic LLMs. Without domain-aware semantics and normalization, answers are vague or misleading.

Natural language means a wide range of data queries: From lists of records, to aggregated numbers, to analysis and explanation of outcomes, users will throw all kinds of sophisticated questions at your agent. You do not want your agent to hallucinate or fail to answer. Either way, you will break trust with users.

Delegate the right question to the right agent: To deliver a great user experience, your chat agent must answer all sorts of questions and handle all sorts of requests. The common way to meet this requirement is a federated model, where your agent integrates with other AI applications and delegates each task or question to the agent best suited to it.
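As a rough illustration of the federated model described above, the sketch below routes a question to one of several agents by keyword. Everything in it is hypothetical: the agent functions, the `ROUTES` table, and the keyword lists are placeholders, and a production router would more likely use an intent classifier than substring matching.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Route:
    name: str
    keywords: tuple[str, ...]
    handler: Callable[[str], str]


# Hypothetical downstream agents. A real integration would call out to
# separate services here instead of returning a tagged string.
def analytics_agent(question: str) -> str:
    return f"[analytics] {question}"


def policy_agent(question: str) -> str:
    return f"[policy-docs] {question}"


def fallback_agent(question: str) -> str:
    return f"[general] {question}"


# Illustrative keyword lists only; routes are checked in order.
ROUTES = [
    Route("analytics",
          ("attrition", "headcount", "time to fill", "acceptance rate"),
          analytics_agent),
    Route("policy", ("policy", "handbook"), policy_agent),
]


def delegate(question: str) -> str:
    """Send the question to the first route whose keywords match."""
    lowered = question.lower()
    for route in ROUTES:
        if any(keyword in lowered for keyword in route.keywords):
            return route.handler(question)
    return fallback_agent(question)
```

With this table, a workforce analytics question goes to the analytics agent, a handbook question goes to the policy agent, and anything unmatched falls through to the general agent.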

Solution

By embedding the Vee API, vendors can turn their chatbots and AI agents into intelligent, trusted decision-making tools, saving months of development time that can go to other priorities. Vee API handles this complexity so you don’t have to. It provides a secure, scalable API layer that translates natural language into high-context, validated, and authorized queries over messy, real-world HR data. Other benefits include:

Prebuilt metadata models: Vee understands HR concepts like “time to fill,” “offer acceptance rate,” “promotion velocity,” and more—so you don’t need to define these from scratch.

Semantic parsing with business context: Vee interprets questions in the context of real roles, teams, and workflows. It knows that "sales rep" in one org might be "account executive" in another. It standardizes messy terms across systems and customers with ready-to-use analytical objects (e.g., candidate, job, hire, req) for filtering, aggregations, and calculations.

Clean, chronological event stream: Every change is tracked and stored as an event. Data is merged and ordered across sources for accurate answers to time-specific questions.

Dynamic join logic across systems: Vee stitches together data across ATS, HCM, job boards, and internal systems with join rules and filters already in place.

Built-in permission enforcement: Every request respects the user’s visibility and role-level permissions out of the box, no additional access logic needed.

No prompt engineering required: You don’t need to tune LLMs or manage vector stores. Just send the question to the API, and Vee returns structured insights.
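To make the event stream idea in the list above concrete, here is a minimal sketch of merging per-source change events into one chronological stream and answering an as-of question from it. It is not Visier’s implementation. The `Event` shape, the status names, and the sample ATS and HCM data are all assumptions made for illustration.

```python
import heapq
from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional


@dataclass(frozen=True)
class Event:
    when: date
    person_id: str
    status: str
    source: str


# Hypothetical sample data; each source list is already sorted by date.
ats_events = [
    Event(date(2024, 1, 10), "p1", "candidate", "ats"),
    Event(date(2024, 2, 20), "p1", "offer_accepted", "ats"),
]
hcm_events = [
    Event(date(2024, 3, 1), "p1", "employee", "hcm"),
    Event(date(2024, 9, 15), "p1", "terminated", "hcm"),
]


def merged_stream(*sources: Iterable[Event]) -> list[Event]:
    """Merge pre-sorted per-source streams into one chronological list."""
    return list(heapq.merge(*sources, key=lambda e: e.when))


def status_as_of(stream: list[Event], person_id: str, as_of: date) -> Optional[str]:
    """Return the person's latest status on or before `as_of`, if any."""
    status = None
    for event in stream:
        if event.when > as_of:
            break  # the stream is chronological, so later events cannot apply
        if event.person_id == person_id:
            status = event.status
    return status


def transition_date(stream: list[Event], person_id: str, to_status: str) -> Optional[date]:
    """Return the date the person first entered `to_status`, if ever."""
    for event in stream:
        if event.person_id == person_id and event.status == to_status:
            return event.when
    return None
```

Once events from both systems sit in one ordered stream, a question such as "what was this person’s status on a given date" or "when did the candidate become an employee" becomes a single pass over the data rather than a per-system change-log interpretation.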

How it works

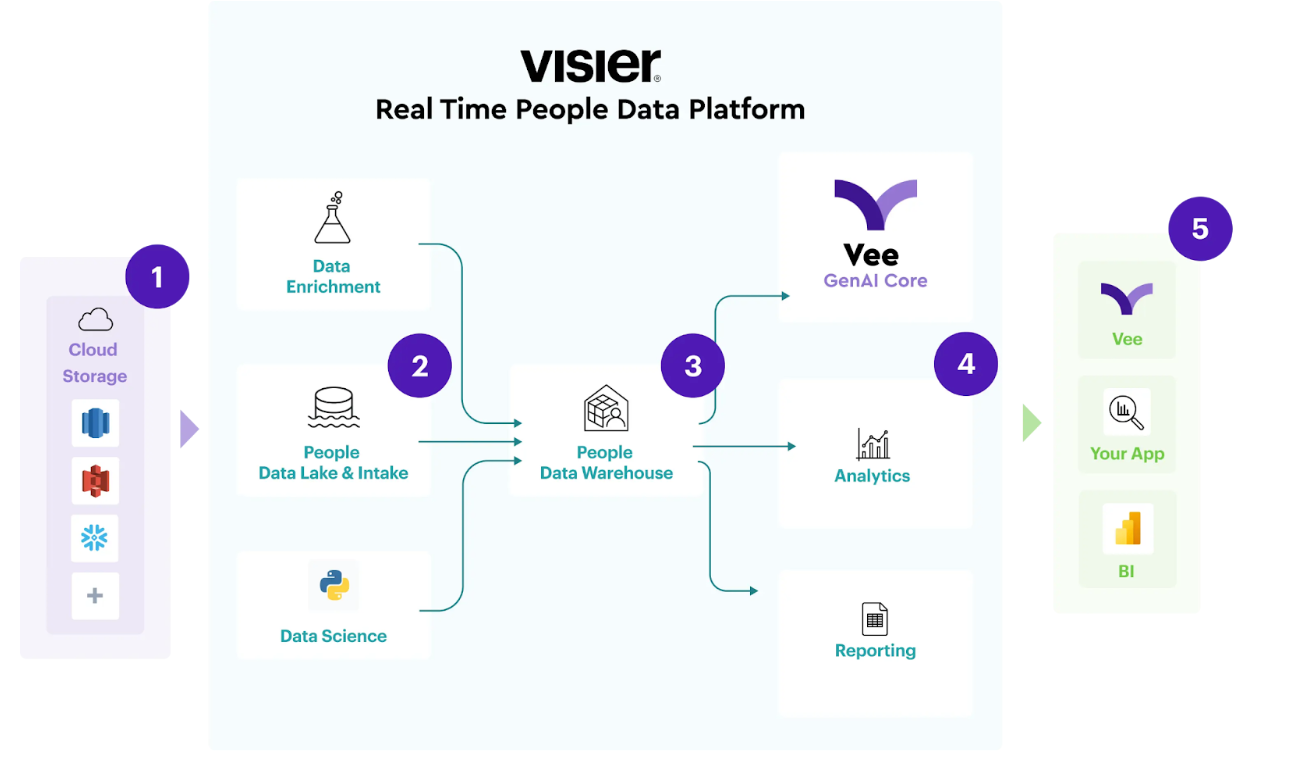

Vee isn’t just an LLM wrapper. It’s a composable analytics agent built for multi-tenant HR data applications. Here’s how it works:

Connectors pull data from source systems (APIs, reports, exports).

Studio models the data, defines logic, and enforces business rules.

The event stream engine tracks changes and builds a history.

The semantic layer applies standard definitions across sources. It parses user questions into structured intents with HR-specific context (e.g., "skills that drove" → driver analysis). It is built on top of Visier’s HR-specific data model, which ensures consistent definitions across data, and it recognizes synonyms, domain-specific language, and even ambiguous terms based on customer-specific metadata.

A policy engine scopes every request to the user’s permissions, enforcing row-level and column-level security. It supports customer-specific org structures, hierarchies, and roles without additional logic from you.

The Vee API exposes structured answers with filters, aggregations, and metadata context. It automatically determines which datasets to pull, how to join them, and what filters or aggregations to apply.

Your agent calls the Vee API to deliver rich, secure, and accurate answers in your own UI. The API returns structured outputs (aggregates, explanations, record lists) or formatted natural-language responses that can feed directly into your chatbot’s UI or a downstream application layer.
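The row-level and column-level scoping mentioned in the steps above can be pictured with a small sketch. This is only an illustration of the concept, not how Vee’s policy engine is built. The `Policy` class, the record fields, and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    visible_orgs: frozenset      # row-level: which org units the user may see
    visible_columns: frozenset   # column-level: which fields the user may see


# Hypothetical workforce records.
RECORDS = [
    {"employee": "A", "org": "Sales", "salary": 90000, "tenure_years": 3},
    {"employee": "B", "org": "Engineering", "salary": 120000, "tenure_years": 5},
    {"employee": "C", "org": "Sales", "salary": 85000, "tenure_years": 1},
]


def scope(records: list[dict], policy: Policy) -> list[dict]:
    """Apply row-level, then column-level, restrictions to a result set."""
    scoped = []
    for row in records:
        if row["org"] not in policy.visible_orgs:
            continue  # row-level: the requester cannot see this org at all
        # column-level: keep only the fields the requester may see
        scoped.append({k: v for k, v in row.items() if k in policy.visible_columns})
    return scoped


# A sales manager who can see Sales records but not salaries.
sales_manager = Policy(
    visible_orgs=frozenset({"Sales"}),
    visible_columns=frozenset({"employee", "org", "tenure_years"}),
)
```

Calling `scope(RECORDS, sales_manager)` keeps the two Sales rows and drops the `salary` field from each. The point of the step described above is that this filtering happens before any answer is produced, so your agent never has to reimplement it.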

Want to see how it works? We built an internal demo app using Vee API to illustrate the power of embedding it into a typical HR tech product. Within minutes, we were able to:

Ask “Which departments have the highest attrition among high performers?”

Compare “Offer acceptance rates for junior vs senior roles in Canada”

Analyze “What drove time-to-fill improvements last quarter in Sales?”

All with zero custom queries, no manual joins, and complete user-level permission enforcement.

Screenshots

Solutions

Docs: Vee for Partners

Explore more about how you can use Vee with your application.

Docs: Vee API

Use this API to answer people questions in your own applications, such as an existing digital assistant. You can embed Vee's conversational functionalities anywhere and deliver instant answers to your users in plain language. You can integrate Vee into an existing digital assistant or your search functionality, or any other interface that your users interact with to answer their workforce questions. To use the Vee API, your team must build the embedding infrastructure to consume Vee through Visier APIs.
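As a starting point for that embedding infrastructure, the sketch below builds an HTTP request that forwards a user’s question on behalf of a signed-in user. The endpoint path, header names, and payload field are placeholders chosen for illustration and are not taken from the Vee API reference. Check the Vee API docs for the actual contract before using anything like this.

```python
import json
import urllib.request

# Placeholder path, NOT the documented Vee API endpoint.
QUESTION_PATH = "/vee/question"


def build_question_request(base_url: str, api_key: str,
                           user_token: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the user's question."""
    payload = json.dumps({"question": question}).encode("utf-8")  # assumed field name
    return urllib.request.Request(
        url=base_url.rstrip("/") + QUESTION_PATH,
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Api-Key": api_key,                    # assumed header name
            "Authorization": f"Bearer {user_token}",  # assumed auth scheme
        },
    )


request = build_question_request(
    "https://example.invalid/api",  # placeholder host
    api_key="demo-key",
    user_token="demo-user-token",
    question="Which departments have the highest attrition among high performers?",
)
```

Passing the end user’s token with each call matters for the design: it is what lets the answer be scoped to that user’s permissions rather than to a shared service account. Sending the request (for example with `urllib.request.urlopen`) and parsing the response are left out because the response shape is not specified here.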